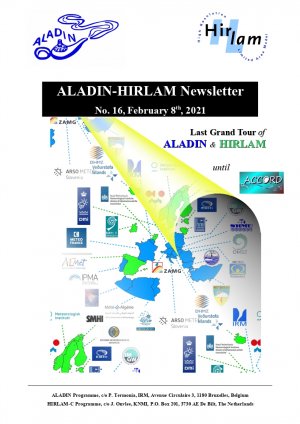

The concept of the ALADIN project was proposed by Météo-France in 1990. Its aim was to build a mutually beneficial collaboration with the National Meteorological Services of Central and Eastern Europe in the field of Numerical Weather Prediction (NWP), which underpins the forecasting tools of modern meteorology. The acronym is easily translated (Aire Limitée Adaptation dynamique Développement InterNational, i.e. Limited Area, Dynamical Adaptation, International Development) and clearly indicates the main axes of the project at its inception.

Twenty-five years later, the 5th Memorandum of Understanding defines the project's aims as follows:

> The goal of the ALADIN Collaboration is to improve the value of the meteorological, hydrological and environmental warning and forecast services delivered by all Members to their users, through the operational implementation of a NWP system capable of resolving horizontal scales from the meso-beta to the meso-gamma scale and improving the prediction of severe weather phenomena such as heavy precipitation, intensive convection and strong winds.
>
> This objective will be fulfilled through continuation and expansion of the activities of the ALADIN Consortium in the field of High Resolution Short Range Weather Forecast, including:
>
> - Maintenance of an ALADIN System (...);
>
> - Joint research and development activities, on the basis of the common Strategic Plan and related Work Plans, with the aim of maintaining the ALADIN System at scientific and technical state of the art level within the NWP community;
>
> - Sharing scientific results, numerical codes, operational environments, related expertise and know-how, as necessary for all ALADIN Consortium members to conduct operational and research activities with the same tools.

About one hundred scientists from sixteen countries, each country with its own resources and expertise, contribute continuously to the development of the ALADIN NWP system. In recent years this has amounted to about 80 full-time-equivalent staff. Together they work on a modern atmospheric model code that deserves its place among the state-of-the-art European NWP models. The code now runs operationally every day in fifteen Euro-Mediterranean countries, on a wide variety of computing platforms ranging from Linux PC clusters to vector computers.

The ALADIN consortium has had a number of unique successes. Examples include plugging an existing physics parameterization into the existing code, which led to the AROME model; the ALARO physics, which puts ALADIN at the forefront of work on the gray-zone problem; and the remarkable stability of the ALADIN dynamical core, among others.

ALADIN has also made it possible to build a high-level scientific team, distributed across sixteen countries, that has reached the level of the best research centres, as evidenced by its PhD theses and publications in international journals. The General Assembly of Partners, workshops, meetings and newsletters regularly provide opportunities for exchange within the ALADIN community.

ALADIN is preparing for the major changes expected in the NWP landscape over the next five to ten years. There is the perennial question of where to draw the line between resolved and parameterized processes. There is the question of the efficiency and scalability of the ALADIN dynamical core. There are external drivers, such as end-user demands and the evolution of high-performance computing machines. In addition, a major reorganization of the code is now imminent, in particular within the OOPS project. Finally, the international meteorological context is steadily changing, notably with the merger of the ALADIN and HIRLAM consortia.